No Accountability for Big Tech = Big Problem

When I take my kids to the movies, we depend on the MPAA rating system to tell my family what to expect. When listening to music, inappropriate content is tagged as having “explicit content.” Video game retailers refuse to stock games that have not been rated by the Entertainment Software Rating Board (ESRB). These rating systems are clearly understood, enforced, trustworthy, and exist to protect the innocence of my children.

It’s unbelievable to me that nothing like this exists for the apps kids use today.

Now, imagine for a moment taking your 13-year-old son to Captain Marvel, which is rated PG-13 due to action and science-fiction violence. Six seconds into the movie, you are confronted with 12 images on the screen ranging from sex toys, to intercourse, to oral sex. “Wait, I thought this was PG-13?” Another six seconds in and you are shown articles on the screen that describe orgies, anorexia, animal abuse, hooking up, and show links to Dropbox folders full of child pornography.

There would be an outcry. Parents would march in major cities. Congressional hearings would take place to find out what went wrong.

And yet, this is exactly what apps like Instagram and Snapchat make readily available to their users every single day (1). These apps are rated 12+, enjoyed by millions of young people, and used by over a billion people every month (2), and no one is holding them accountable for their content.

No one.

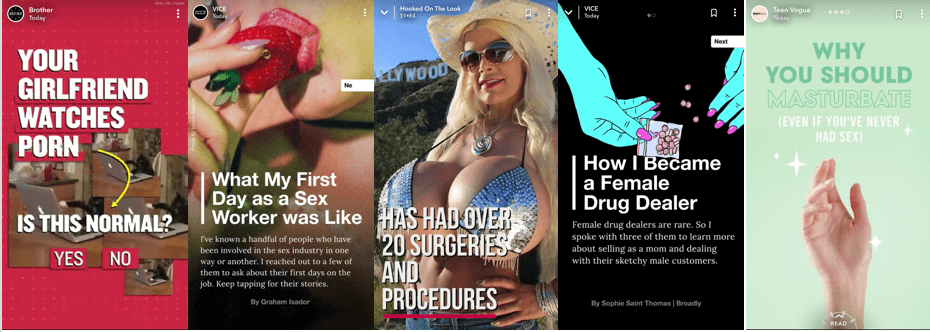

Different Year. Same Snapchat Discover.

In 2017, we wrote a viral piece about Snapchat’s Discover section. In it, we took screenshots of Discover articles over five days. In November 2018, Snapchat told us they had started blocking (“age-gating”) mature content from minors (those who have their real birthday set). We don’t think it works. Remember, Snapchat is rated 12+ in Apple’s App Store.

These are just five screenshots from Snapchat Discover from March 2019, using an account with an age of 15.

Video Games Show us the Way (thank you, Mortal Kombat!)

Back in 1993, violence in Mortal Kombat caused a congressional hearing that determined video game companies were irresponsibly marketing mature content to minors (3). It was animated, cartoon violence that motivated lawmakers to change an industry.

Where’s the outcry today? Why isn’t more being done to protect our young people? Since when are hardcore pornography (4), violence, terrorist acts (the recent live stream of the New Zealand shooting), graphic self-harm (7), animal abuse (5), child pornography (8), and hatred acceptable for seventh graders in an app rated 12+? Why does Apple tell parents that their child’s favorite social media platform is appropriate for 12-year-olds, yet the Children’s Online Privacy Protection Act (COPPA) requires all users to be at least age 13? Why do different mobile app stores rate apps differently?

All of this furthers my belief that the current app rating system is broken. At times, the information provided by Apple and Google is so inaccurate that it borders on deceptive. The lack of social responsibility displayed by social media developers is shocking.

It’s time for change. The Fix App Ratings Movement is calling for two actions:

- The creation of an independent app ratings board with the power to impose sanctions for non-compliance.

- The release of intuitive parental controls for iOS, Android, and Chrome operating systems.

I believe that if done properly, these two steps would give more parents what they need to make informed decisions about the appropriateness of the digital places where their kids spend time.

Some will object, saying parents are the ones to blame. After all, they’re the ones paying for the service and providing access to the devices.

Let’s be clear. Parents are absolutely the first shapers of their children when it comes to responsible use of technology. But parents cannot control all of the digital doorways that influence their children. Large technology companies have a social responsibility – a “duty of care” – to abide by a reasonable and consistent set of standards and provide intuitive controls so that the parents who care can guide and protect their kids in the digital age.

Ruth Moss from the UK’s National Society for the Prevention of Cruelty to Children said,

“And I’ve often heard people say, ‘But it’s the parent’s responsibility to keep their children safe online’, and yes it absolutely is, parents need to do as much as they can, but my message today is parents cannot do that on their own because the internet is too ubiquitous and it’s too difficult to control, it’s become a giant.”

Two actions. For our children, let’s reach across the aisle and get this done.

For more information, please visit fixappratings.com

References:

1. McKenna, Chris. (January 1, 2019). Snapchat’s Brother Channel Tells Kids: Watch Porn, Masturbate, and Always Use Incognito Mode [Blog post]. Retrieved from: https://wptemp.protectyoungeyes.com/snapchat-brother-channel-tells-kids-watch-porn/.

2. Constine, John. (June 2018). Instagram Hits a Billion Monthly Users, up from 800M in September [Blog post]. Retrieved from: https://techcrunch.com/2018/06/20/instagram-1-billion-users/.

3. Kohler, Chris. (July 29, 2009). July 29, 1994: Videogame Makers Propose Ratings Board to Congress [Blog post]. Retrieved from: https://www.wired.com/2009/07/dayintech-0729/.

4. McKenna, Chris. (August 11, 2018). Instagram Porn is Worse than you Think. It’s Time for Action [Blog post]. Retrieved from: https://wptemp.protectyoungeyes.com/instagram-porn-worse-time-for-action-parental-controls-needed/.

5. Gentry, Mason. (January 9, 2018). Instagram has an Animal Abuse Problem [Blog post]. Retrieved from: https://medium.com/@mtgentry81/instagram-has-an-animal-abuse-problem-ff59027142.

6. Dines, Gail. (January 29, 2019). What kids aren’t telling parents about porn on social media [Blog post]. Retrieved from: https://www.bostonglobe.com/magazine/2019/01/29/what-kids-aren-telling-parents-about-porn-instagram-and-snapchat/z12WPJFUGYt0vm1fDOndDP/story.html.

7. McKenna, Chris. (February 2, 2019). What is Suicide Porn? Instagram Blamed in Young Teen’s Death [Blog post]. Retrieved from: https://wptemp.protectyoungeyes.com/what-is-suicide-porn-instagram/.

8. Lorenz, Taylor. (January 8, 2019). Teens are Spamming Instagram to Fight an Apparent Network of Child Porn [Blog post]. Retrieved from: https://www.theatlantic.com/technology/archive/2019/01/meme-accounts-are-fighting-child-porn-instagram/579730/.