Pedophiles Trade Child Porn Through Dropbox Links on Instagram

(Updated March 2, 2021) The Atlantic first reported that teenagers stumbled upon a network of Instagram accounts that were sharing Dropbox links of child porn (Atlantic article). Pedophiles were tagging images with certain hashtags, and those images advertised how to get in touch with them. Teens discovered this and spammed the offending hashtags with hundreds of memes, making it difficult for pedophiles to find each other and trade illegal content.

Brilliant. Kids defending other kids!

Although it was an admirable diversion, these criminals are resourceful. And with over a billion monthly users, Instagram simply can't keep pace with nefarious activity.

Maybe your kid already uses Instagram. Great! I’m not saying you need to rip it away; in fact, that is often counterproductive. Instead, we hope this post will help you better understand how the app’s design creates risks.

Because remember, not all kids using Instagram end up being groomed and abused.

But if grooming and child exploitation are easy on the app, my guess is you would want to know. Even CNN recently reported that Instagram is the #1 app for child grooming.

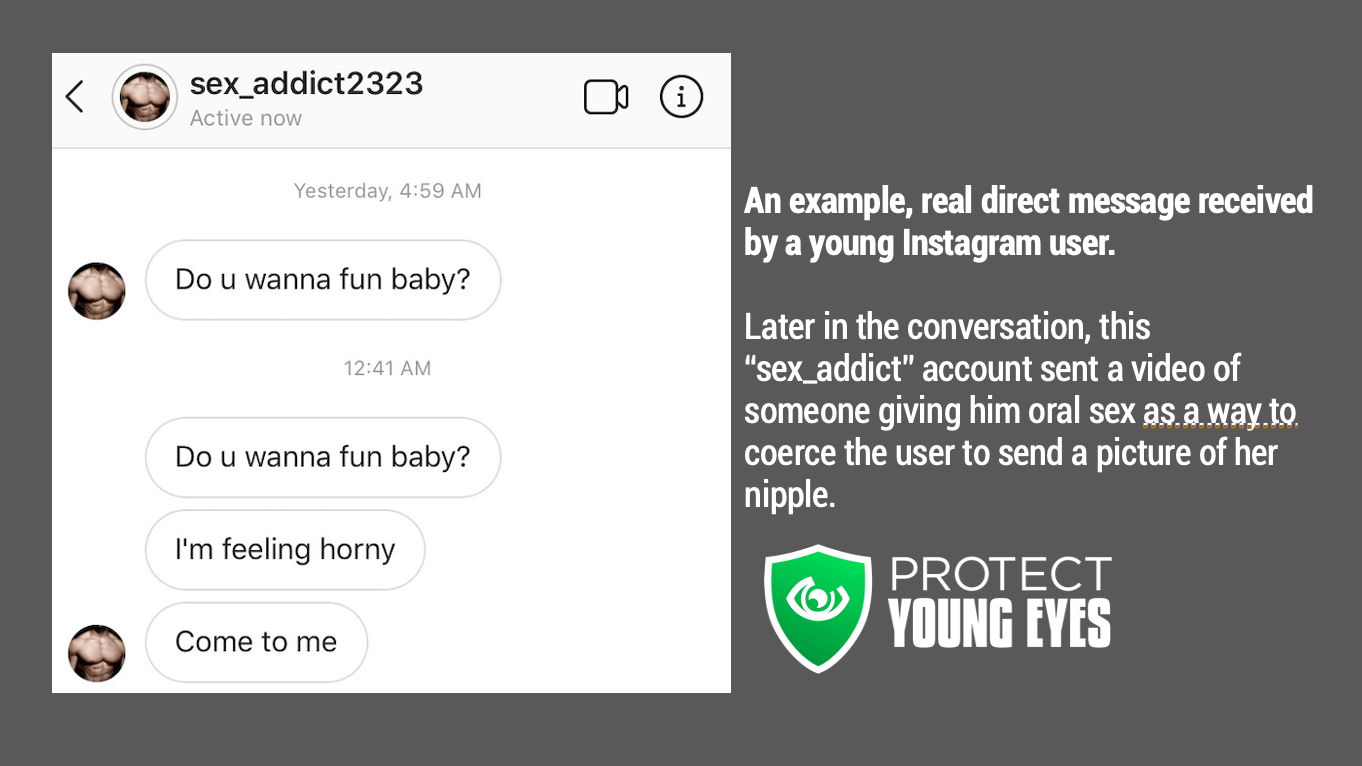

If your son or daughter receives a private DM (direct message) from a stranger, does he or she know how to respond? It happens more easily than you think. Remember, wherever the kids are is where the predators are.

We simply want this post to shine a light in dark places. Since Apple’s App Store description doesn’t say anything about predatory activity, it’s our job to tell the truth.

**Warning:** Some of the screenshots you will see in this post are not safe for work (NSFW) and include some of the most disturbing content we’ve ever encountered in over four years of researching social media. Nothing has been censored.

Four Grooming Paths on Instagram – Comments, Hashtags, Likes, and DMs

If Instagram leadership reads this post, they’ll likely point to their community guidelines and reporting channels and say that they don’t allow predatory activity. But we would argue that the very way Instagram is designed creates grooming pathways. In other words, no amount of moderation or guidelines can change Instagram’s features. Allow us to explain.

Oh, and one more thing. Many parents who read this might think, “My child has a private account, so they’re fine.” That’s a common but incorrect conclusion. None of the four feature issues we discuss below are affected in any way by the privacy of an account. Any account, private or not, can post comments and search hashtags, can show up in a photo’s list of likes, and can receive a DM.

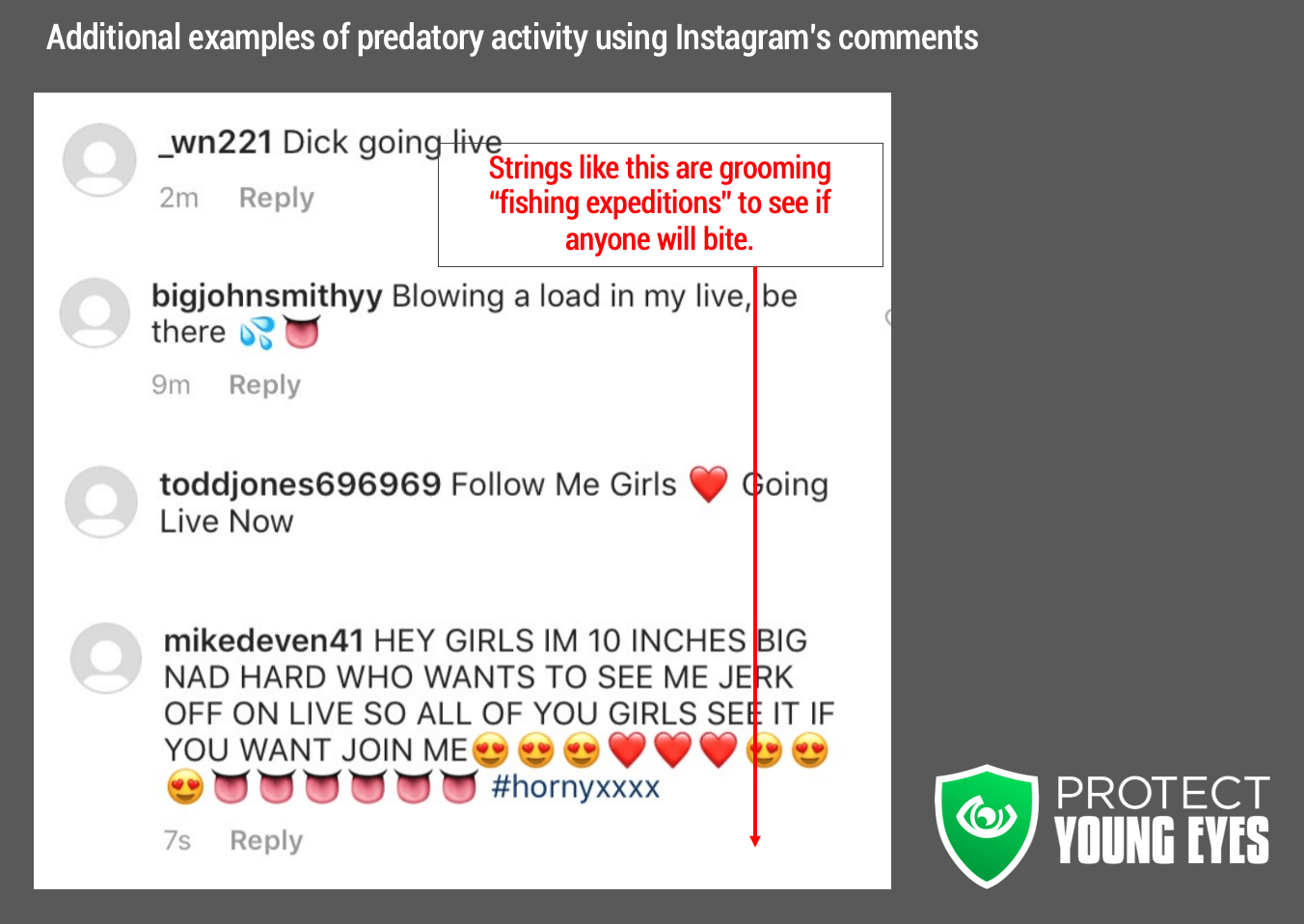

Pedophiles exploit Instagram’s comments to network with each other and fish for victims.

Within the comments, pedophiles find each other and trade their illegal and disgusting content. Here are a few samples from an endless number of comments (warning: these comments are extremely disturbing):

You also see comments aimed directly at young people as a form of “fishing” for victims, waiting for a kid to bite.

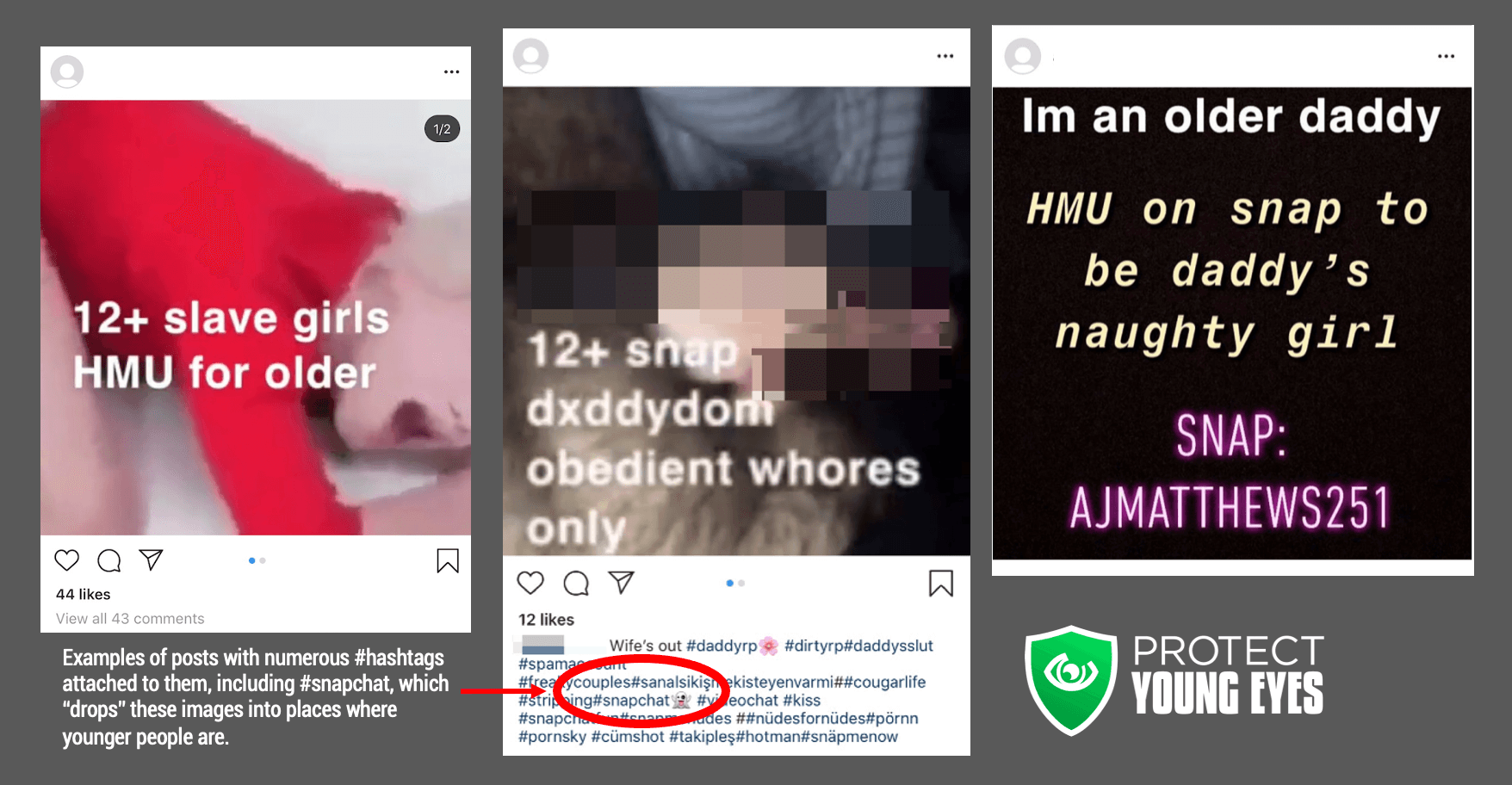

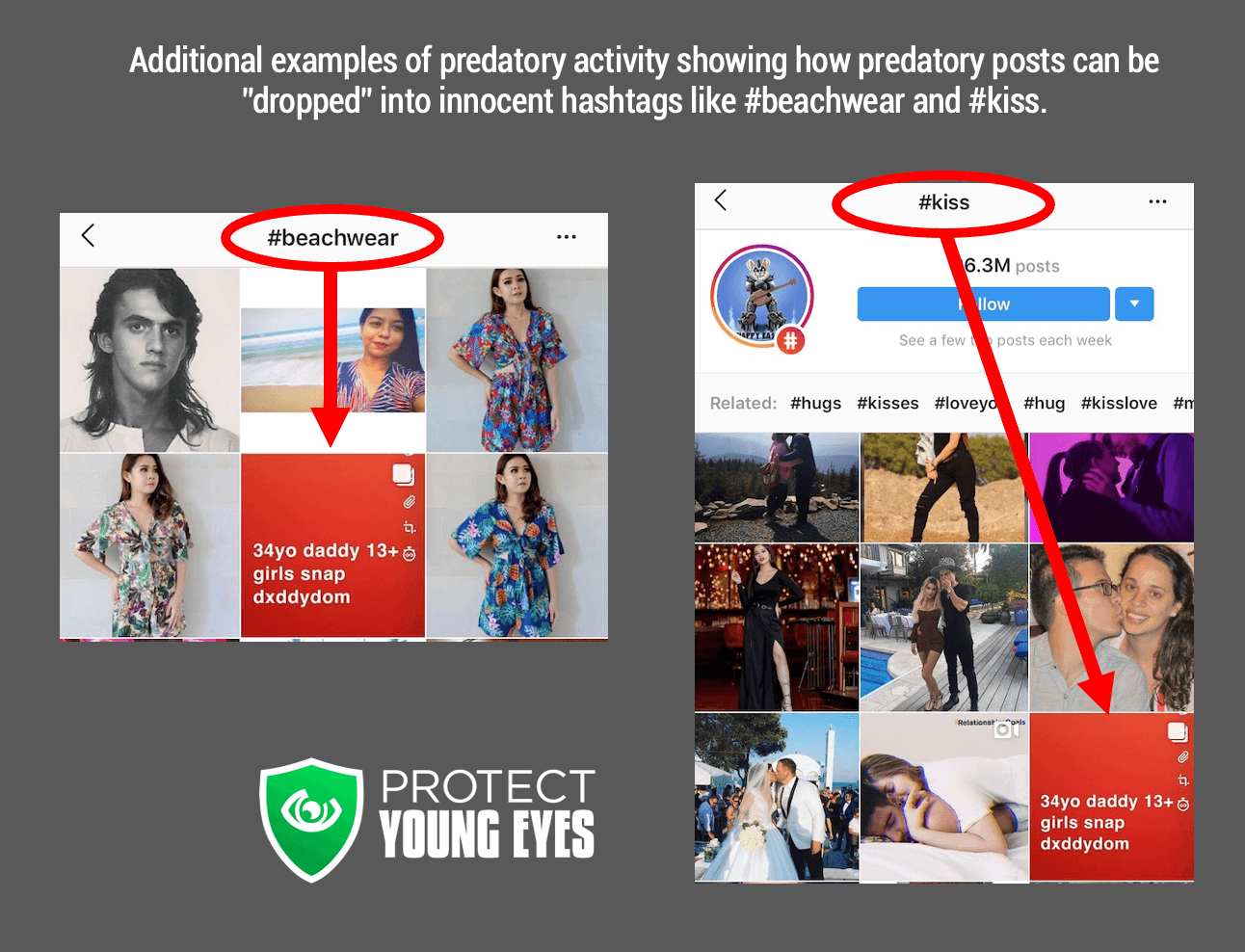

Pedophiles exploit Instagram’s hashtags to drop horrible content into good, clean places.

Almost all social media platforms use #hashtags. Think of them as a card catalogue for social media content: a way to sort millions and millions of images into groups so that I can find exactly what I’m looking for. We love them! Some people even use them as a sort of witty second language.

But the problem is that they can be used by anyone.

Let’s say for a minute that I’m a teen girl who’s interested in modeling. Or cheerleading. And my mom even made me have a private Instagram account (good job, mom!).

I take a photo at the beach with my friends, and I attach the hashtags #teen #teengirl #teenmodel #snapchat. Fabulous. Later on, with my girlfriends, I’m thumbing through the #teenmodel and #snapchat hashtags, and I see this:

See, any predator can attach #teenmodel and #snapchat to their photo. That puts the photo in front of millions of teen girls thumbing through #snapchat photos, and the predator waits, hoping one will “bite.”

Notice in one of the photos how part of the “sell” is to convince a girl to join him on Snapchat, which is a very secure environment for secretive activity. After all, more than 75% of teens have Instagram and more than 76% (AP Article) have Snapchat, so if a kid has one, there’s a good chance they have the other.

In other words, #hashtags allow predators to hover over good places like a drone and drop their smut whenever they want. Pay attention to those screenshots: there’s nothing pornographic about them. There are no swear words. No use of “sex.” But the very nature of #hashtags as a feature creates this grooming path.

And if someone reports the “daddy” posts you see above and Instagram takes them down, no problem. Since Instagram doesn’t require any identity verification (no verified birthday, real email, credit card, NOTHING), a predator can create another fake account in seconds. This is yet another huge design flaw: pedophiles don’t mind taking great risks and getting shut down, because their attitude is, “I’ll just start over.”

[Note: we experienced this with “daddy,” whom we reported multiple times. Each time his account was shut down, he popped up seconds later with a slightly different username, posting the same horrifying images of himself masturbating and asking kids to connect with him “live.”]

Related post: We Tested Instagram’s “No Nudity” Rule. We Can’t Show You the Results.

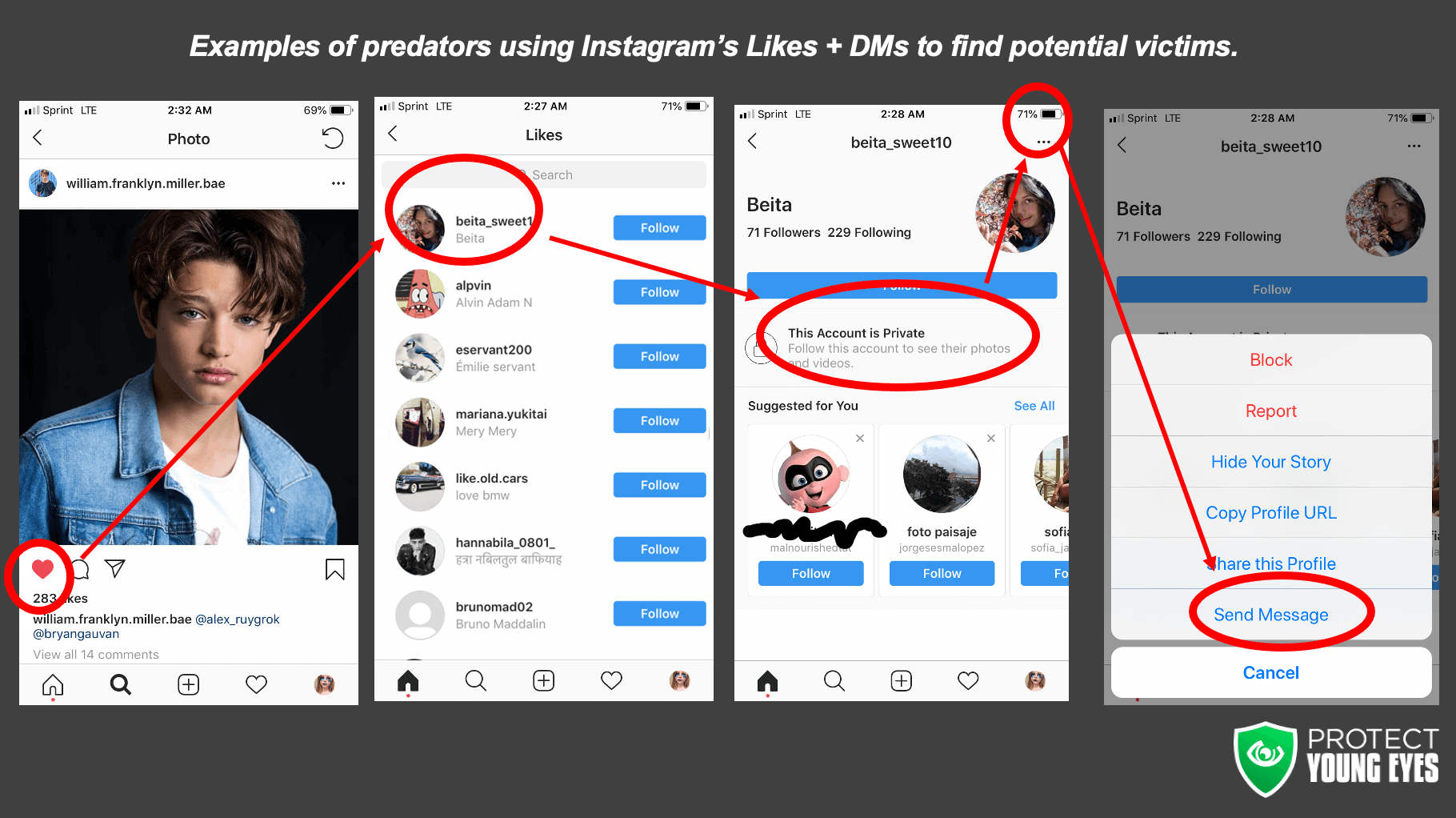

Predators exploit Instagram’s likes (the heart) to identify potential victims.

Going back to our #teenmodel example, if you click on one photo, you might find that it has hundreds of likes (hearts) similar to the photo of the young boy below (sorry, but if you don’t want your photo in blog posts, then keep your account private).

Predators can click on the likes and see everyone who has liked the photo. Everyone. Even accounts that are private. From that list, a predator can pick out someone young who looks interesting and send him or her a direct message (DM); we’ll explain the DM feature in more detail next. Note how the “likes” feature creates a target audience for sexual predators. This is shown in the image below.

Again, it’s a design flaw. The very nature of the likes feature creates a pool of young people for predators to target. (To Instagram’s credit, it has reportedly considered dropping the like count attached to photos, but so far this is only speculation.)

Which leads us to DMs. Direct Messages.

Pedophiles exploit Instagram DMs (direct messages) to groom kids. And they’re doing it very successfully.

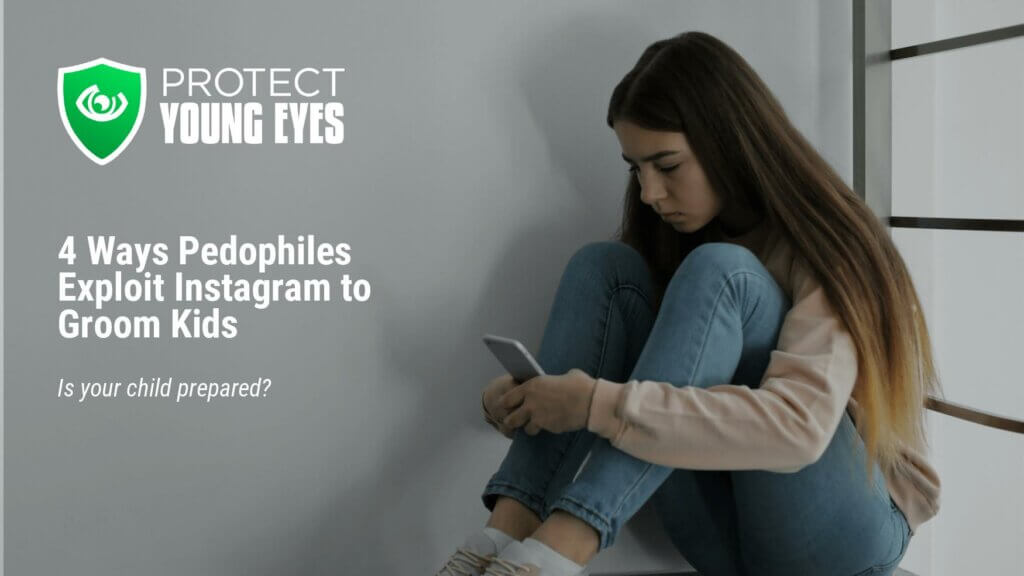

Two weeks ago, PYE created a test Instagram account. The account was clearly presented as belonging to a young girl, who posted two selfies on its first day. The photos were tagged with the hashtags #teen, #teengirl, and #teenmodel. The account then “liked” a few photos with similar hashtags and followed accounts similar to its own.

Not much happened for the first six days of the account.

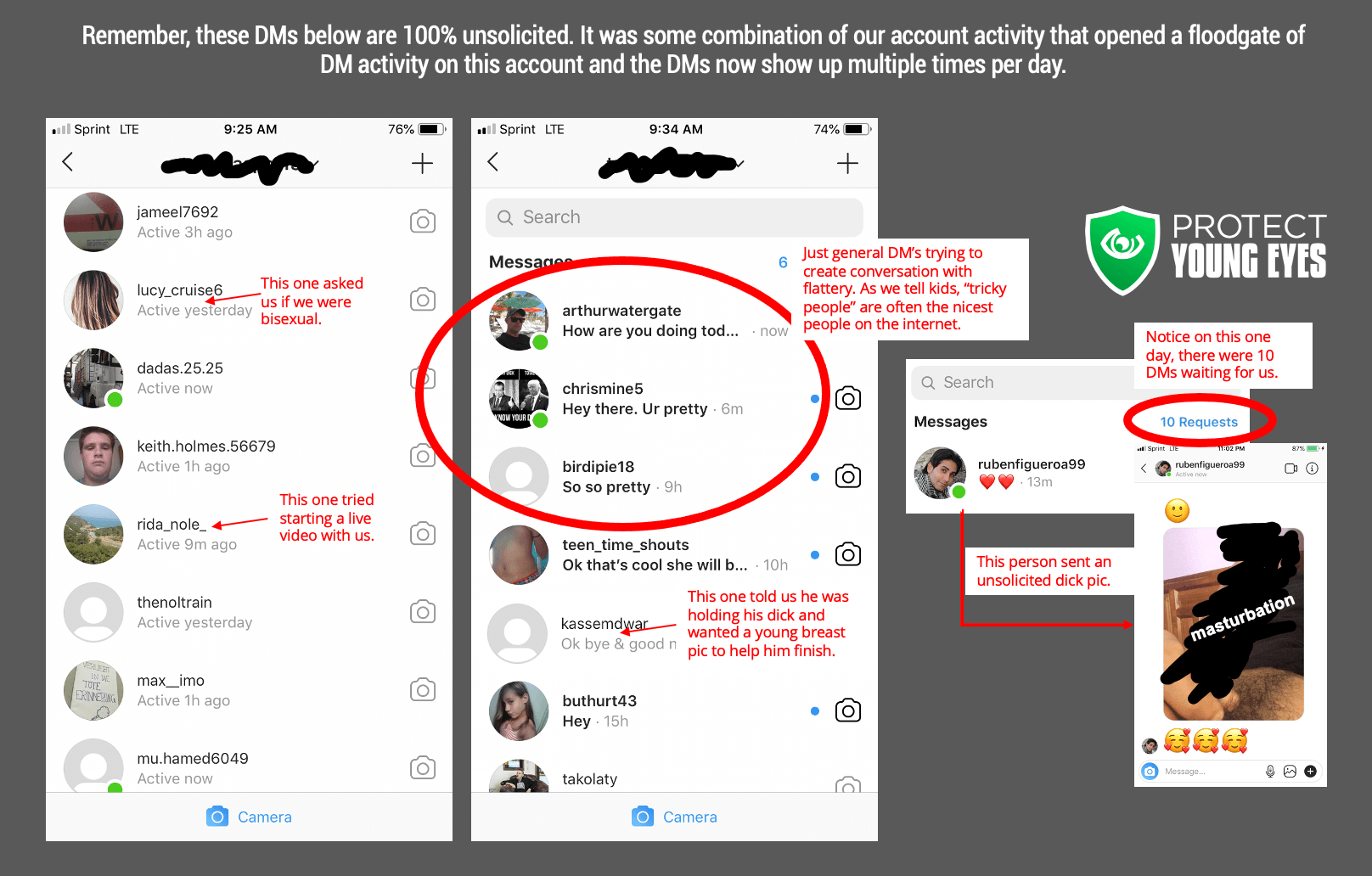

Then, about a week in, something in Instagram’s algorithm seemed to trigger. It was as if some combination of the test account’s activity unleashed a tsunami of DMs that hasn’t let up over the past four days, averaging more than 10 DMs per day. The screenshots below show some of the activity, including a very creative porn link. Note: PYE scribbled out the man masturbating in the image below. The photo was sent to our test account as a DM, completely exposed.

Can Instagram Fix Its Predator Problem?

Maybe. To clean up the issues above, Instagram would have to significantly alter several core features. If Instagram were to create a “Safe Mode,” it might have to:

- Remove the ability to DM with anyone who isn’t an approved follower. (Updated October 2020: users can finally stop DMs from people they aren’t following! Instructions here. That only took 10 years!)

- Allow parents to create a whitelist of approved contacts, so the child can ONLY like, comment, and DM with people on that list.

- Remove the ability to add hashtags.

What Can Parents Do About the Instagram Pedophile Problem?

1. If your kid uses social media, including Instagram, be curious and involved. Remember, not every kid misuses these platforms. But now that you know the risks, talk openly with your children about how they’re using the app.

2. Use monitoring tools like Bark (7 days free!) and Covenant Eyes (30 days free!) to keep track of your kids’ smartphone social media and texting activity. Bark even scans images within the app and alerts parents when kids come across inappropriate ones.

3. Talk to your kids specifically about direct messages and give them guidance on what to do if someone tricky reaches out to them. Be sure to TURN OFF DMs from people they aren’t following. This is a must for parents and kids! Instructions for doing that are in this post.

—————————————————->

Parents, we love BARK and how it helps parents AND kids. Here’s a real story…

“We knew our son was having some issues with school and in his social circle but he doesn’t talk to us about anything…he googled “What is it called when there’s a war going on inside your brain?”…The fact that he used the word “war” prompted BARK to mark it as violence…Call it depression or anxiety or regular mood swings teens experience, he wasn’t opening up to anyone about this and never mentioned it…I have a psych evaluation setup for him in a few days and I just have to say how grateful I am that BARK caught this. I would otherwise have no idea that this was even an issue for him and we can now get some professional help to ensure that it doesn’t become a true problem.”

*Note: links in this post might connect to affiliates whom we know and trust. We might earn a small commission if you decide to purchase their services. This costs you nothing! We only recommend what we’ve tested on our own families. Enjoy!